Email Extraction, Transformation, and Loading into Graph Format

The emails are stored in EML format at addresses of the form $\texttt{https://wikileaks.org/dnc-emails/get/i}$ where $\texttt{i}$ is an integer between 1 and 22,456. The emails were downloaded one-by-one from WikiLeaks using the powerful tool $\texttt{curl}$. The downloader script I prepared is available on Github. It took about 6 hours to retrieve all 22,456 emails (1.7 GB).

The emails were parsed using the $\texttt{email.parser}$ package built into Python. Given a file object for an email, the $\texttt{HeaderParser}$ class returns the header data in key-value pairs. I extracted the sender, recipient(s), and subject line from the header of each email and then did some text preprocessing (e.g., converting letters to lower case, stripping white space) before writing this information into ASCII files (dnc_subject-out.tab, dnc_from-out.tab, dnc_to-out.tab) available on Github.

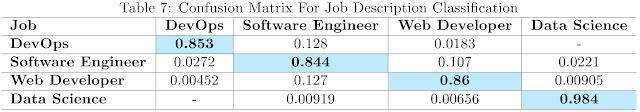

While the subject lines will not be used in the graph analysis, it is interesting to briefly consider them because they provide clues about the email contents. A wide variety of topics, including some surprising ones, are discussed. Figure 1 lists subject lines that caught my attention.

|

| Figure 1: A sample of subject lines in the DNC emails. |

The DNC emails are described on WikiLeaks as having come from seven email accounts. The numbers of unique email senders (1192) and recipients (1169) in this email dataset, however, are much larger than seven! The total number of unique identifiers among all senders and receivers is 1777. This includes two identifiers labeled as 'None' and 'undisclosed-recipients' representing one or more unknown email addresses. Some of the identifiers are phone numbers or generic labels like 'wireless caller'.

The details of email communication between all unique email addresses can be represented in an NxN matrix $M$ such that the value of $M_{ij}$ is the number of emails $i$ sent to $j$. If $j$ wrote back, then $M_{ji}>0$. Cells along the diagonal ($M_{ii}$) are positive and nonzero if people email themselves. $M$ intuitively translates into a directed graph. Every cell $M_{ij}$ represents a potential graph connection (or edge) from vertex $i$ to vertex $j$, which only exists if $M_{ij}>0$. If $M_{ji}>0$ also, then a parallel edge exists from vertex $j$ to vertex $i$.

For simplicity, I chose to represent the DNC email network as an undirected graph. This means an edge between vertices $i$ and $j$ exists if $M_{ij}>0$ or $M_{ji}>0$. I discarded the emails where no sender (N=144) or receiver (N=223) information was available (i.e., emails having 'None' or 'undisclosed-recipients' as the sender or recipient), which removed 26 vertices from the graph.

There are various graph analysis packages in Python (e.g., graph-tool, igraph, NetworkX). I chose igraph because it is simultaneously available in R as well as Python. The python-igraph interface is intuitive to use, and it is quite fast for my purposes.

Taking advantage of igraph support for vertex and edge attributes, I assigned each vertex a 'name' attribute to store an email address string. I also gave the edges an attribute ('dir') to represent one-way versus two-way communication and another attribute ('mag') to represent the number of emails exchanged. There are 1751 vertices and 3976 edges in the final graph, which is available on Github in GraphML format. One can immediately explore the DNC email network by importing this graph into his or her favorite graph analysis tool. My Python scripts on Github (mkGraph.py, analysis.py) demonstrate how to interact with the graph.

Graph Visualization and Community Analysis

The first step in appreciating the complexity of the DNC email network graph is visualization. Fortunately, igraph provides a powerful but straightforward plotting interface. With experimentation, I found force-directed algorithms produced the most aesthetically pleasing result. Figure 2 shows the graph as drawn with the Fruchterman Reingold force-directed layout. Each circle is a vertex representing a unique email address. Gray edges mean one-way email communication while orange edges indicate two-way communication. The center of the graph is crowded with many crossing edges. At intermediate radii are fan-like structures, or "communities", in which many nodes that share a common email connection are sparsely connected to outside nodes. At the outskirts are nodes that have only one email connection or that just email themselves, creating a loop.

Next, let's consider the basic properties of the vertices and edges. Vertex degree refers to the number of edges adjacent to a vertex (note, a loop adds 2 to vertex degree). The histogram of vertex degree has a steep drop at low degree and a long tail at higher degree. The majority of vertices (1127/1751, 64.4%) have degree$=1$. Significantly less (17.1%) have degree$=2$. About 9.7% have degree$\geq7$, and the top 5.0% have degree$\geq18$. The top three vertex degrees are 476 (comers@dnc.org), 471 (mirandal@dnc.org), and 274 (kaplanj@dnc.org).

Figure 2 gives the impression that most email communication is unidirectional. Indeed, 64.4% (2641/3976) of edges in Figure 2 are gray. Figure 3 gives insight into the number of emails typically exchanged (either way combined) between individuals. The majority of email exchanges are relatively brief. About 35.9% of graph edges signify the exchange of a single message. Approximately 66.9% of graph edges represent an exchange of $\leq4$ messages. Roughly 9.9% of edges correspond to email exchanges $\geq23$ messages. The top three email exchanges involve comers@dnc.org mailing himself (512 messages), mirandal@dnc.org and paustenbachm@dnc.org mailing each other (665 messages), and dncpress@dnc.org mailing itself (1492 messages).

|

| Figure 3: The cumulative fraction of graph edges as a function of emails sent per edge in the DNC email network graph. The bins are five emails per edge wide. |

Figure 4 is a similar plot showing how the cumulative fraction of confined edges increases with the maximum allowed vertex degree. The curve in Figure 4 rises smoothly initially but then begins to show step features corresponding to the community structures in Figure 2. The community structures arise because vertices of intermediate and high degree connect to many vertices that are in turn sparsely connected. A significant fraction (34.1%) of the graph's edges are connected to one or more of the five vertices with degree $\geq 125$. The legend of Figure 4 lists the persons associated with these most influential vertices, and the same five vertices are also distinguished in Figure 2 as being slightly enlarged and having vertex colors matching the lines in Figure 4.

While Figure 4 associates the most highly connected nodes with separate communities, a more sophisticated approach is required to find all communities and determine their member nodes. The study of community structure in graphs is an active field, and there are many algorithms implemented in igraph for this purpose. From the available algorithms in igraph, I have chosen the Louvain Modularity (LM) method. Modularity is a scalar measure of graph structure; positive modularity means vertices are more densely interconnected than expected by chance. LM partitions communities in a bottom-up, hierarchical way that maximizes the modularity of the graph. LM is suitable even for networks with over 100 million nodes, and it is fast and effective compared to competing algorithms.

The LM algorithm finds 47 communities in the DNC email network and reports a modularity of 0.6 (on a scale of [-1, 1]). Over half (36/47) of the communities have just one or two members, and these small communities are the isolated vertices in the graph outskirts. The other 11 communities have between 5 and 429 members. Figure 5 redraws the graph with color coding for the vertices in the 11 largest communities. As intuitively expected, the largest fan-like structures are separate communities. There are also multiple communities coexisting in the center of the graph where it was hard to see structure in Figure 2.

Table 1 lists the 11 largest communities in descending order of the number of community members. The second column names the person with the most email connections (i.e., highest vertex degree) in each community; these people may perhaps be considered as "community leaders", and they appear as enlarged nodes in Figure 5. The third column of Table 1 indicates the color of the community in Figure 5. The five vertices with degree $\geq125$ highlighted in Figure 4 turn out to be community leaders in Table 1. The number of nodes per community roughly, but not perfectly, increases with the vertex degree of the community leader.

This graph analysis has demonstrated how to find substructure in a graph and further how to identify the most prolific individuals within network communities.

Interactive Shiny Web Application

Shiny is a web application framework for the R programming language. It is powerful while still being simple to use, and its attractive widgets make for very nice looking applications. I have designed a Shiny application that provides interactive visualization of the DNC email network. This application incorporates the R version of igraph and uses the qgraph package for plotting. My approach was to prototype all the intended functionality with python-igraph first, because it is more intuitive to use, and then port it over to the R version. It is worth noting that the igraph R interface is slightly different than python-igraph. I also highly recommend qgraph over the R base graphics for plotting graphs.

The layout of the application consists of a sidebar panel with widgets and a main panel area where the graphs are drawn. The sidebar (Figure 6) consists of two drop-down menu ($\texttt{selectInput}$) widgets and one checkbox group ($\texttt{checkboxGroupInput}$) widget.

|

| Figure 6: The sidebar panel in the Shiny application contains drop-down menus and checkboxes that control three separate plots. |

Graph A shows the subgraph where all vertices have degree $\leq$ the value in the first drop-down menu (i.e., it's like an interactive representation of Figure 4). Gradually building up the graph by raising the value in the drop-down menu is useful for viewing small-scale structure that is obscured in the full graph. Similarly, Graph B shows the subgraph where all vertices have degree $\geq$ the value in the second drop-down menu; this serves to isolate the relationships between the most highly connected nodes. Graph C allows the user to select via checkboxes a subset of the 50 vertices with the highest degree. All email connections of the selected vertices are shown, allowing one the see the email network for specific individuals. Each subgraph plot is annotated with the number of vertices, edges, and the modularity. Figures 7a and 7b show examples of the graphs produced in the application.

|

| Figure 7a: Sample output from the R Shiny web application. Graph A shows vertices with degree $\leq 28$. Similarly, Graph B shows vertices with degree $\geq 274$. |

|

| Figure 7b: Graph C shows the email network of comers@dnc.org, allenz@dnc.org, houghtonk@dnc.org, and brinsterj@dnc.org. |

This application has been deployed on shinyapps.io. The source code is available on Github.

Summary

In this post, I have provided a simple analysis of the email network inferred from the leaked DNC emails. Transforming the sender-recipient information into a network graph is a straightforward process once the emails are in hand. Analyzing the substructure of the resulting graph reveals 11 communities within the DNC email network containing 5-429 individuals. Scrutinizing the community membership also reveals the most prolific participants, or leaders, within each community. Finally, I have demonstrated the utility of the R Shiny framework for data science web applications with an example that is sure to provide some amusement this election season.