Automating text classification is an exercise in machine learning. Given a training set of text documents with human annotated categories, text classification algorithms learn through adjusting weights on discriminative text features to minimize the training error. In general, this process is not fully automatic because human participation is needed to prepare and label the training data and to define the features used by the algorithms.

A variety of machine learning algorithms can be used for text classification (e.g., naive Bayes, logistic regression, support vector machine, k-nearest neighbors). The methods tend to differ in how the training errors are measured, and in how they combine features together (e.g., linearly versus non-linearly). Classification performance depends more on having good text features than on the details of the algorithm, so there is no clearly dominant classification algorithm.

In this post, I will discuss the naive Bayes algorithm and demonstrate its performance on classic literature and job advertisements. I find naive Bayes performs extremely accurately (>98% average accuracy) on passages from books. Classification of job advertisements works well (91% average accuracy for tech jobs) and has potential utility in human resource (HR) departments and on job aggregator websites.

The Naive Bayes Algorithm

Consider a document d having category $\theta_i$. Bayes theorem tells us the identity in Equation 1 is true. It says the probability of category $\theta_i$ given d, $p(\theta_i|d)$ (i.e., the posterior), is proportional to the probability of obtaining category $\theta_i$, $p(\theta_i)$ (i.e., the prior), times the likelihood function $p(d|\theta_i)$ of the document being generated given category $\theta_i$.

|

| Equation 1 |

Finding the correct category of a document is a matter of maximizing the posterior probability. Naive Bayes maximizes the product of $p(\theta_i) \times p(d | \theta_i)$. In contrast, discriminative classifiers like logistic regression, support vector machines, and k-nearest neighbors directly calculate the posterior.

Equation 2 further breaks down the naive Bayes algorithm. Making the naive assumption that words in documents are independently generated (which works well in many applications), the likelihood $p(d|\theta_i)$ can be rewritten in terms of a product over all words in the global document corpus vocabulary $\left( \prod_{w \in V} \, p(w|\theta_i)^{c(w,d)} \right)$. This introduces another conditional probability, $p(w|\theta_i)$, representing the probability that a word $w$ is drawn from category $\theta_i$. Finally, the logarithm of the posterior probability is used in practice to preserve numerical precision because the calculation involves the multiplication of many small probabilities.

|

| Equation 2 |

A naive Bayes classifier requires a training set in which documents are labeled with their

corresponding categories (Equation 3).

|

| Equation 3 |

From the training set, the algorithm calculates $p(\theta_i)$ and $p(w|\theta_i)$ for each category. The number of documents need not be the same in each category as $p(\theta_i)$ accounts for unequal category probabilities; $p(\theta_i)$ is equal to the number of documents in category i divided by the total number of documents in the training set (Equation 4).

|

| Equation 4 |

The text categories in naive Bayes are represented as bags of words in which the words are weighted by probability of appearance. These conditional word probabilities uniquely define each text category. Given the word probabilities for a category as calculated by naive Bayes, one could in principle generate documents in that category; this is why naive Bayes is considered a generative model. Equation 5 defines the conditional probability $p(w|\theta_i)$. The numerator is the count of the word in the training set for category i. The denominator represents the total number of words in all training set documents.

|

| Equation 5 |

The categories in text classification are analogous to the topic models in topic modeling because topic models are likewise represented with probability-weighted bags of words. Compared with topic modeling, however, text categorization with naive Bayes is a simpler problem. In topic modeling, the topic-specific conditional word probabilities and mixing weights for each document-topic combination are fitted parameters. In naive Bayes text categorization, only the conditional word probabilities for the k categories are tracked, and the categories are predefined in the training set.

Classifying Book Excerpts

In this section, I test how well the naive Bayes algorithm can match short excerpts of text with the books they come from. This test is simple in part because no manual classification is needed to construct the training set, yet it is versatile because the length of the excerpts is readily changeable. Using excerpts of longer word count reduces the training sample size, allowing study of how the tradeoff between excerpt length and training set size affects performance.

My test uses 10 classic novels plus one autobiography (1,000,000+ words total) from Project Gutenberg. I've used this dataset in my previous text mining projects, here and here. I run separate trials in which the books are each partitioned into excerpts of length 250, 400, or 550 words. These particular numbers were chosen to match the lengths of typical job advertisements (the 25th, 50th, and 75th percentiles of the job advertisements I use below are 276, 404, and 563 words, respectively). Table 1 shows the number of excerpts provided by each book for the different excerpt lengths. After the excerpts are created, they are converted to lower case letters, filtered for stop words, and words are trimmed with the Porter2 stemming algorithm.

Text classification is performed on all 11 books simultaneously with the implementation of naive Bayes in the open source MeTA (ModErn Text Analysis) toolkit. For each excerpt length (250, 400, and 550 words), a classifier is trained and tested with 5-fold cross-validation in which the input is equally divided into five folds. A classifier is trained on four parts and tested on the remaining one. This process iterates so that each separate fold is tested against a classifier trained on the other four. The average performance across all five iterations is a robust measure of classifier performance because every excerpt is used in the evaluation phase.

The results are surprisingly good for an excerpt length of 250 words, with an overall classification accuracy of 98.6% based on 4490 predictions. Accuracy alone, however, is not the only useful metric of machine learning performance. Table 2 shows the confusion matrix in which the rows represent the actual class of the excerpts while the columns represent the predicted class. Each row shows the fraction of excerpts from a given book that the classifier assigned to each possible category. Cells along the diagonal indicate the rate of true positives for each book, a metric referred to as recall in the context of machine learning. The recall for each book varies between 100% and 93.3%. The three books with the lowest recall are Dracula (97.7%), Strange Case of Dr. Jekyll and Mr. Hyde (94.2%), and The Adventures of Tom Sawyer (93.3%).

Excerpts from Dracula are confused with several books including Alice in Wonderland (0.15%, 1/657), Autobiography of Benjamin Franklin (0.46%, 3/657), Emma (0.15%, 1/657), Great Expectations (0.30%, 2/657), Grimms' Fairy Tales (0.15%, 1/657), Strange Case of Dr. Jekyll and Mr. Hyde (0.15%, 1/657), and The Adventures of Sherlock Holmes (0.91%, 6/657). In contrast, excerpts from Strange Case of Dr. Jekyll and Mr. Hyde are confused only with The Adventures of Sherlock Holmes at a rate of 5.8% (6/104).

Excerpts from The Adventures of Tom Sawyer are mistaken for Frankenstein (1.3%, 4/298), Great Expectations (0.03%, 1/298), and Adventures of Huckleberry Finn (5.0%, 15/298). It is perhaps not surprising the algorithm has difficulty separating the two Mark Twain novels given they share overlapping characters, vocabularies, and writing styles. However, the confusion is one-sided in that only 0.8% (4/471) of excerpts from Adventures of Huckleberry Finn are misidentified as belonging to The Adventures of Tom Sawyer. This is probably because the Adventures of Huckleberry Finn category, being based on a longer book (471 versus 298 equal-size excerpts), has a higher probability of occurrence than the The Adventures of Tom Sawyer category.

Precision, the fraction of correct output category declarations, is another useful metric to describe classification performance. Whereas recall is normalized in terms of the true number belonging to a category, precision is normalized in terms of the number of times a category was selected. High precision means few false positives, and vice versa. Table 3 shows the precision (and recall for comparison) for excerpt length 250 words. Precision and recall are similar in most cases. The Adventures of Tom Sawyer has the largest disparity in precision and recall (98.6% versus 93.3%). The recall is lower because parts of The Adventures of Tom Sawyer are confused with other books, particularly Adventures of Huckleberry Finn. In turn, Adventures of Huckleberry Finn has relatively low (96.9%) precision because of the false positives from The Adventures of Tom Sawyer.

Increasing the excerpt word count to 400 raises the overall classification accuracy (to 99.3% for 2812 predictions) and improves the precision and recall for each book. This performance improvement occurs despite there being fewer excerpts in each category (Table 1). Note, the number of words presented to the classifier for each book is not changing. The books are still being presented in full to the classifier, just in fewer, larger chunks. Likewise, the classifier is still being trained on 80% of the book and is being tested on the other 20% (via 5-fold cross-validation). The extra words per excerpt apparently give the algorithm a stronger hint of the correct category.

Performance is better still when the excerpt word count is 550. The overall accuracy is 99.5% on 2045 predictions. The precision and recall in each category (Table 4) are better than 95%, and three books have both precision and recall equal to 100%. Some confusion between The Adventures of Tom Sawyer and Adventures of Huckleberry Finn still exists, however.

Text classification works very well on book excerpts. The 11 books were written by 10 different authors. Each author has a unique writing style and vocabulary, and naive Bayes seems able distinguish these differences with the $p(w|\theta_i)$ conditional probabilities it calculates.

Classifying Job Advertisements

Here, I apply naive Bayes text classification to the more challenging, and practical, scenario of job advertisement classification. A job advertisement (or posting) should sell the company and position to a qualified job seeker. Ideally for hiring managers, the advertisements are sexier than the dryer, more technical job descriptions maintained internally by HR, although many job postings are uninspired. Of concern is that some jobs seem to have arbitrary or silly job titles, and certain job titles are used inconsistently by companies (e.g., software engineer versus web developer). Given that these problems are bound to be reflected in job advertisements, I want to know whether naive Bayes can learn the difference between various job roles from typical job postings available on the web. If so, there are sure to be applications within HR departments and on job aggregator websites.

I assemble job advertisements relevant to four job categories: DevOps (a relatively new job role that intersects traditional software development, quality assurance, and information technology operations roles), software engineering, web development, and data science. In principle, these roles imply different expertise. In practice, however, jobs in these categories can share some overlapping technical skills (e.g., programming languages, databases, user interface development). For example, web development can involve building the user interfaces of websites, software engineering can involve the user interfaces of desktop or mobile applications, and data science can involve creating

dashboards. Additionally, some software engineering applications incorporate tools also used by data scientists, like machine learning algorithms, databases, and Hadoop. Commonalities like these blur the boundaries between different job roles and potentially make job classification less certain. I deliberately built my case study to be challenging in this way.

Job postings in the four categories were retrieved from a job aggregator service. Specifically, I scraped the results of separate searches for "software engineer", "web developer", "DevOps", and "data scientist" in various major cities. From the accumulated results, I inspected the job titles for relevance and manually removed nonrelevant job postings. Duplicates in the remaining set of viable jobs were identified and removed. This is an important step to prevent copies of the same postings from simultaneously appearing in the training and test datasets. Collapsing the job postings into vector counts of words allowed duplicates to be efficiently identified with vector operations.

Before running the classifier, I enforced specific criteria on the postings to ensure the separate categories were consistently defined. The words "DevOps" and "web" were required to appear in the job titles of postings in the "DevOps" and "Web Developer" classes, respectively. Likewise, job titles in the "Data Science" category all include the word "data", and almost all have the word "scientist" too (the remaining few say "engineer" or "analyst" in lieu of "scientist"). Software engineering is a more diverse field, and the related titles are not as uniform as in the previous three categories. In addition to titles specifying "software engineer", I included in the "Software Engineer" group titles like "software developer", "Java developer", "C++ coding", "general programmer", "game programmer", and "mobile development evangelist", among others. Postings with titles that obviously prioritized web development (e.g., full stack web developer) were not allowed. All jobs specializing in software quality assurance were excluded to further restrict the scope of the Software Engineer category. Application of these criteria yielded 111 to 764 jobs per category. Table 5 shows the final number of postings in each category.

As a sanity check on the consistency of my label annotations, I ran the text classifier on the job titles. Classification was performed as in the previous section using five-fold cross-validation. The confusion matrix in Table 6 indicates the classifications are 100% perfect, implying my job-labeling scheme is self-consistent. Classification of job descriptions is not expected to work so perfectly, but this test shows the labeling done by me cannot be a source of error.

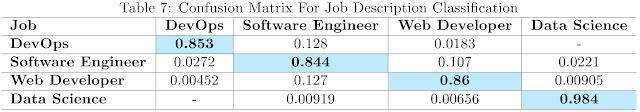

Classification of the job description portion of the advertisements yields an overall accuracy of 91%, based on 1680 predictions. This is encouraging considering I chose a hard scenario (tech jobs). Table 7 shows the confusion matrix. The recall for the DevOps, Software Engineer, and Web Developer categories ranges from 84.4% to 86%. In contrast, recall in the Data Science category is 98.4% because this category is more easily distinguishable from the others. About 12.8% (14/111) of DevOps jobs were confused for Software Engineer jobs, but only 1.8% (2/111) were confused for Web Developer jobs. Software Engineer jobs were called DevOps, Web Developer, and Data Science jobs at rates of 2.7% (16/590), 10.7% (63/590), and 2.2% (13/590), respectively. Few Web Developer jobs were considered DevOps (0.45%, 1/222) or Data Science (0.9%, 2/222), yet 12.7% (28/222) were designated Software Engineer. Among Data Science jobs, 0.92% (7/764) were classified as Software Engineer, and 0.66% (5/764) were deemed Web Developer.

Table 8 shows the precision (with recall for comparison) in each category. Precision is comparable to recall in the DevOps (~85%) and Data Science (~98%) classes. There is a disparity, however, for Software Engineer and Web Developer. Software Engineer has precision greater than recall (0.91 versus 0.84). A classification of Software Engineer has a 91% chance of being correct, yet, due in large part to confusion with the Web Developer class, classification is only correctly identifying 84.4% of all Software Engineer jobs. Web Developer has rather low precision (0.73) because of the number (63) of Software Engineer jobs being called Web Developer is a sizeable fraction of the number of correct Web Developer predictions (191).

In this experiment, the confusion between DevOps, Software Engineer, and Web Developer jobs, related roles with overlapping skills, is of order 10%. One component of this error could be mismatches between the job descriptions and their provided job titles, the latter of which I used as the basis for my own labeling. Reinspection of the misclassified job descriptions may indicate that, in some cases, the predicted classification is actually more appropriate for the job description than the one I gave given the provided job title. In that case, the error rate of this job classification experiment is lower than stated. I do not have the expertise to assess this possibility, however.

Conclusion

In this post, I have applied naive Bayes text classification to classic literature and job advertisements. Book excerpts represent an ideal scenario because there is no uncertainty on the correct classification of text. In this experiment, the overall accuracy exceeds 98% on excerpts of length 250 words. Increasing the size of the excerpts raises the accuracy despite shrinking the size of the training set.

Classification of job advertisements is a harder problem because of similarities in different job functions and also because job titles are not always applied correctly. Classification of four related technical roles yielded an overall accuracy of 91%. There was modest confusion, of order 10%, between job roles. If this experiment were repeated using unrelated job categories (e.g., administrative, engineering, finance, sales), the accuracy would be even higher.

The automated classification of job postings has potential applications. HR departments could classify their own job descriptions relative to a database of company/industry jobs in order to select the correct job title. Job aggregator websites might classify their job postings and make such value-added results searchable to users. Finally, employee resumes could be classified by a model trained on job descriptions to assess how well a candidate fits with the available roles.