Data scientists commonly interact with relational databases. Answering a typical business question (e.g., what are our projected sales?) could involve learning which databases and tables hold the relevant data, constructing the query to pull the result set, and then post-processing the data in SQL, a spreadsheet, or Python. Where significant post-processing or analysis is required, getting the data into Python can be a winning option given the breadth of available standard and third-party libraries. Sometimes, it is further necessary to upload the processed data or derived artifacts from Python back into the database for consumption by others.

Databases typically support Python through modules following the Python DB API interface (PEP 249). This API, in my opinion, is more suited for software engineers than pandas-centric data scientists or data analysts. More appropriately, pandas allows for reading to and writing from dataframes via SQLAlchemy. I have observed in professional settings that mixing and matching Python DB API code with pandas + SQLAlchemy creates a mess.

With a little creativity and foresight, it is possible to create a toolchain that is simultaneously simple, easy to use, and able to abstract away the details of using databases. In this article, I present my favorite way to interface with databases. An elegant twist on the pandas-SQLAlchemy API, it provides a drop-dead simple API and can slickly manage default connection settings. It can be readily extended to any database supported by SQLAlchemy. I build my case from examinations of the Python DB and the pandas-SQLAlchemy APIs. For an immediate look at my solution, please skip to Figure 11.

The Python DB API

Python PEP 249 outlines a low-level API to communicate with a generic database management system as described by computer science. It standardizes database connections, database transaction management (e.g., commits and rollbacks), and the operation of cursor objects. A database cursor is like an iterator object that handles row traversal as well as retrieval, insertion, and deletion.

Typical usage of the Python DB API involves the following steps:

- Load the package for a particular database (SQLite, MySQL, etc.).

- Establish a connection by specifying the relevant server, database name, database schema, and any required authentication credentials.

- Extract a cursor object from the connection.

- Run the query or SQL command with the cursor's execute method.

- If retrieving data, call one the of the cursor's fetch methods (e.g., fetch_all).

- Convert the result set into a Python dataframe.

|

| Figure 1: Reading data from MySQL using the Python DB API. |

Writing back to the database also involves the cursor object. If the table does not exist, it needs to be explicitly created from the cursor. One must then write and apply the insert statements through the cursor. Figure 2 shows an example for MySQL.

|

| Figure 2: Creating and writing a database table with a cursor object. |

The pandas-SQLAlchemy API

The Python DB API will likely feel unintuitive to data scientists and analysts lacking a significant computer science background. Data analysis in Python commonly incorporates pandas dataframes, and, luckily, the pandas project provides support for relational databases via SQLAlchemy. The pandas.read_sql function reads a query result set into a dataframe. The DataFrame.to_sql method pushes the contents of a dataframe to a database table. No cursor management or detailed understanding of database operation is required.

The most involved aspect of using a database with pandas is initially hooking the database up to SQLAlchemy. This configuration begins with the SQLAlchemy engine, which is aware of the type of database being used, where the database is located (e.g., web versus memory), and any authentication credentials. It also allocates resources (a connection pool) to manage database connections. Figure 3 defines an example engine for a MySQL database running on localhost. Note the database username and password are retrieved from the environment.

|

| Figure 3: Connecting to a MySQL database via SQLAlchemy. |

With the connection in hand, the pandas utilities are easy to use. Figure 4 repeats the same retrieval from Figure 1, with much less code.

|

| Figure 4: Using pandas.read_sql to query the database. |

Uploading to the database is likewise easier. Figure 5 re-implements the upload in Figure 2. Note that the data is formatted as a dataframe instead of insert statements.

|

| Figure 5: Writing a new database table with Dataframe.to_sql. |

Additionally, the SQLAlchemy engine allows for execution of SQL commands. A little more work, it involves getting a connection from the engine and converting the SQL statement into a SQLAlchemy text object. Figure 6 shows an example helper function and its usage. Note that the commit method is called after the command is executed. Following the Python DB API, the connection object does not assume the changes will be automatically committed (any auto-commit feature supported by the underlying database must be turned off by default).

|

| Figure 6: Executing SQL commands with SQLAlchemy. |

To simplify the pandas-SQLAlchemy API, one could write helper functions that abstract away the details of SQL read, write, execute operations. Figure 7 provides such an example. Note that the pandas functions support several important kwargs; any optional arguments are passed through to pandas.

|

| Figure 7: Helper functions for the pandas-SQLAlchemy API. |

These helpers are a reasonable way to engage with a database in Python. They are agnostic to the type of database since anything supported by SQLAlchemy is also supported. Less than ideal, however, is that the SQLAlchemy engine must be explicitly created and passed into the functions every time.

My dbio (database input/output) module

The next logical step is to represent a database connection as a custom class having 1) read, write, and execute methods and 2) a SQLAlchemy engine stored as an attribute. This design allows object methods to already have access to the engine, saving the user from repetitively passing in the engine with each call. For extra convenience, the SQLAlchemy engine is automatically created on initialization without the user having to remember the specific details for connecting to a particular database (e.g., Figure 3). I put this idea into practice through my own Python package called dbio (database input/output).

There were two important design choices I made. First, I built a base class called _Connector that defines the read, write, and execute methods. These methods are similar to the helper functions in Figure 7 but omit the function argument for the engine; they instead reference the engine attribute of the class. The other difference is that the read method optionally performs a memory optimization procedure called downcast that shrinks data to the smallest possible datatype while preserving all the information. For example, a 64-bit integer (int64) might convert to int16 without any downside. In my experience, the biggest memory savings comes from converting strings to the pandas categorical type.

The constructor of the base class needs to be aware of the database server, default database name, and default schema; these are set as object attributes. Figure 8 shows how the base class initializes.

|

| Figure 8: The base class in dbio. Not pictured: the read, write, and execute methods. |

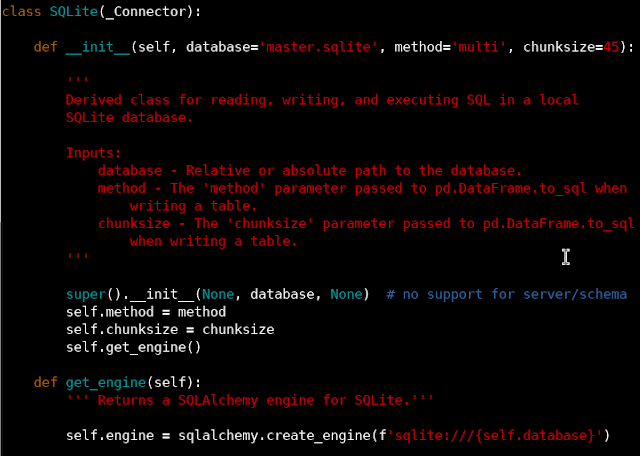

The second important design decision was that supported databases each have their own derived class that inherits read, write, and execute from the base class. The constructor for the derived class is aware of all the parameters needed to get the database connection and sets sensible defaults; on initialization, the server, default database, and default schema are passed to the base class constructor (super().__init__). Each derived class also contains a get_engine method that sets up the SQLAlchemy connection given the input parameters; note that get_engine is called automatically by the constructor.

Figure 9 shows the subclass for a SQLite connector. Note that with SQLite there is no database server or schema (the database is a file).

|

| Figure 9: The SQLite derived class. |

The derived class for MySQL (Figure 10) is slightly more complicated. In addition to the database server, the username and password credentials must also be handled. Accordingly, the get_engine method takes more arguments. Note that MySQL also does not support a schema.

|

| Figure 10: The MySQL derived class. |

Finally, Figure 11 shows how the MySQL database operations look when they are done through a dbio connection object (cobj). This version is significantly shorter because of all the boiler plate code abstracted away by dbio. With this tool, a data scientist can focus more acutely on the project without sacrificing any ability to interact with a database.

|

| Figure 11: Sample usage of dbio. The connection object (cobj) provides the read, write, and execute methods. |

Building a Python package

For ease of re-use and portability, I have created a dbio Python package and installed it on my own system in a virtual environment. The current standard for creating a package is to use a pyproject.toml file. This configuration file defines the name of the package, version, dependencies, and other details such as how the package will be built. Figure 12 shows the settings for dbio. Note that I'm using setuptools. Also, the package version is set dynamically from an __init__.py file.

|

| Figure 12: The pyproject.toml file for the dbio project. |

Final remarks

Dealing with databases in Python is a lot more fun with dbio! To date, I have written three versions of dbio. The one described here is my own personal version (and is available upon request). Other versions I have written professionally support SQL Server, Snowflake, and Denodo. Using dbio with an unsupported database does not require modifying the package. One could import the _Connector base class and write their own local module for any other database.

Certain customizations to dbio may make sense depending on the details of the development/production environment. Regarding database parameters, for example, the default database and schema could be set to whatever is appropriate for the broader team. If warranted, a derived database class need not even depend on SQLAlchemy. Snowflake provides its own support for pandas dataframes that may in some cases be superior to going through SQLAlchemy. In this case, the read, write, and execute methods can be defined in terms of the vendor-provided tools without changing the dbio API.

In contrast to the MySQL connector presented here, where the user is responsible for providing the credentials, authentication may be carefully automated. For example, when a team is operating with a common 'service account', the account name could be set as a default parameter in the database connector, and the corresponding password (or whatever) could be securely retrieved from, e.g., a vault. Some vendors, such as Snowflake, support single sign-on through a web browser. In this case, the username could be automatically retrieved through the environment, and the browser authentication could be triggered automatically as part of the get_engine method.

No comments:

Post a Comment